Johannes Grohmann

Dr. rer. nat. Johannes Grohmann

Chair of Software Engineering (Informatik II)

Department of Computer Science

University of Würzburg

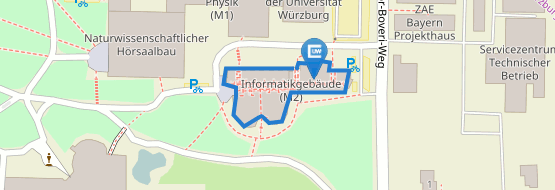

Am Hubland, 97074 Würzburg

Informatikgebäude (Computer Science Building), 1st floor, Room A102

Phone: +49 (931) 31 86875

johannes.grohmann@uni-wuerzburg.de

ORCID: 0000-0001-7960-0823

Google Scholar

dblp

ResearchGate

Research Interests

- Self-Aware Computing & Machine Learning

- Performance Modeling of Software Systems

- Failure Prediction and Analysis in Microservice Architectures

- Design and Evaluation of Serverless Applications

Selected Publications:

- Model Learning for Performance Prediction of Cloud-native Microservice Applications. Thesis; Universität Würzburg. (2022, March).

- SARDE: A Framework for Continuous and Self-Adaptive Resource Demand Estimation. in ACM Transactions on Autonomous and Adaptive Systems (2021). 15(2)

- SuanMing: Explainable Prediction of Performance Degradations in Microservice Applications. in Proceedings of the 12th ACM/SPEC International Conference on Performance Engineering (ICPE) (2021).

- Baloo: Measuring and Modeling the Performance Configurations of Distributed DBMS. in 2020 28th International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems (MASCOTS) (2020). 1–8.

- Monitorless: Predicting Performance Degradation in Cloud Applications with Machine Learning. in Proceedings of the 20th International Middleware Conference (2019). 149–162.

Community Activities

- Member SPEC Research Group "DevOps Performance" since January 2017

- Member SPEC Research Group "Cloud" since April 2018

- PC Member Symposium on Software Performance (SSP 2019, 2020)

- PC Member Poster Track International Conference on Performance Engineering (ICPE 2018, 2020)

- Election Officer SPEC Research Group 2018, 2019

- Participation Software Engineering for Intelligent and Autonomous Systems (SEfIAS) (GI-Dagstuhl Seminar 18343, 2018)

Teaching

- Lecture and exercise coordination Softwaretechnik (Software engineering)

- Student supervision Softwarepraktikum (Practical course on software engineering)

- Student supervision Softwareengineering Seminar (Seminar on software engineering)

- Supervision of Bachelor's and Master's theses

Curriculum Vitae

Occupation:

| Period | Position |
| --- | --- |
| Sep 2017 - Nov 2017 | Research Intern at Nokia Bell Labs, Dublin, Ireland |
| since January 2017 | Research Assistant at the Chair of Software Engineering (Computer Science II) headed by Prof. Samuel Kounev, University of Würzburg, Germany |
| Dec 2012 - Mar 2016 | Multiple Occupations as Student Researcher at the Institute of Computer Science, the Institute of Mathematics, and the Service Center for Innovation in Teaching and Learning, University of Würzburg |
| Feb 2014 - Mar 2014 | Bachelor's degree candidate at PASS Consulting Group, Aschaffenburg, Germany |
| Aug 2013 - Sep 2013 | Internship at PASS Consulting Group, Aschaffenburg, Germany |

Education:

| Period | Degree / Program |
| --- | --- |
| Jul 2014 - Oct 2016 | Master of Science in Computer Science at the University of Würzburg |
| Jan 2015 - Jun 2015 | Semester Abroad at the Blekinge Institute of Technology (BTH) in Karlskrona, Sweden |
| Oct 2011 - Jun 2014 | Bachelor of Science in Computer Science at the University of Würzburg |